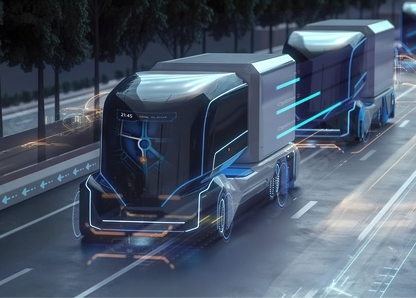

Next-Generation Infrastructure for Distributed Data Processing and AI/ML

Lightweight, secure, ultra-fast, economical platform for building edge-to-core operational data pipelines

What is InfinyOn?

InfinyOn provides a suite of products designed to streamline and enhance data pipeline processes, helping companies manage data from diverse sources efficiently.

- Fluvio: A lightweight, distributed data streaming engine optimized for resource efficiency and edge-readiness.
- Stateful Data Flow: Facilitates the creation of intricate data processing flows from various sources with asynchronous state retention.
- Connectors: Tools to build and extend connectivity with any digital system, using the Fluvio Connector Development Kit.
- Edge-Ready: Deployable on ARM64 hardware and edge devices, ensuring seamless operation in an IoT environment.
- Cloud-Native & AI-Native: Operates seamlessly across cloud platforms and integrates with AI workflows.
- Secure: Built with Rust and Wasm for memory safety, secure execution, reliability, and data integrity.

Key Benefits of InfinyOn

- Integrated Data Pipeline: A single modular system for data collection, enrichment, transformation, and analysis.
- Extreme Resource Efficiency: Consumes minimal compute, memory, and network resources for optimal performance.
- Flexibility & Connectivity: Supports custom connectors for unlimited integration capabilities with any digital system.

Performance & Scalability

Rapid Data Processing

Process millions of records in single-digit seconds, ensuring swift data analysis and insights.

High Throughput

Scale up to handle hundreds of thousands of records per second, meeting demanding data processing needs.

Seamless Integrations

Rapidly develop custom connectors with our Connector Development Kit, including connectors for HTTP, WebSocket, MQTT (for IoT), and SQL-based databases.

Ease of Use

Focus on application logic with our unified system for data-to-dashboard integration, featuring modular and composable AI/ML data pipelines.

Ready to Transform Your Data Operations?


Building blocks of reliable dataflows

Collect your data with built-in connectors, webhooks, and IoT collectors, or build your own custom clients.

```yaml
apiVersion: 0.1.0
meta:
  version: 0.2.5
  name: http-github
  type: http-source
  topic: github-events
  secrets:
    - name: GITHUB_TOKEN
http:
  endpoint: 'https://api.github.com/repos/infinyon/fluvio'
  method: GET
  headers:
    - 'Authorization: token ${{ secrets.GITHUB_TOKEN }}'
  interval: 30s
```

```yaml
apiVersion: 0.1.0
meta:
  version: 0.2.5
  name: helsinki-mqtt
  type: mqtt-source
  topic: helsinki
mqtt:
  url: "mqtt://mqtt.hsl.fi"
  topic: "/hfp/v2/journey/ongoing/vp/+/+/+/#"
  timeout:
    secs: 30
    nanos: 0
  payload_output_type: json
```

```yaml
apiVersion: 0.1.0
meta:
  name: my-stripe-webhook
  topic: payment_confirmations
  secrets:
    - name: stripe_secret_key
webhook:
  verification:
    - uses: infinyon/[email protected]
      with:
        webhook_key: ${{ stripe_secret_key }}
```

```bash
## Cloud Collector
$ fluvio topic create collector-topic --mirror
$ fluvio topic collector-topic --mirror-add 6001
$ fluvio cloud register cluster --id 6001 --source-ip 35:42:12:192
$ fluvio cloud cluster metadata export --topic local-topic \
    --mirror-spu 6001 --file remote-6001.toml

## IoT Sensor (ARMv7, ARM64, etc.)
$ fluvio cluster start --local --config remote-6001.toml

## Produce at Edge
$ fluvio produce local-topic
> test from 6001

## Read from Cloud
$ fluvio consume collector-topic -B
test from 6001
```

```rust
use fluvio::{Fluvio, RecordKey};

#[async_std::main]
async fn main() {
    let topic = "rust-topic";
    let record = "Hello from rust!";

    // Connect to the cluster and create a producer for the topic.
    let fluvio = Fluvio::connect().await.unwrap();
    let producer = fluvio.topic_producer(topic).await.unwrap();

    // Send one record without a key, then flush so it is delivered before exit.
    producer.send(RecordKey::NULL, record).await.unwrap();
    producer.flush().await.unwrap();
}
```

```python
#!/usr/bin/env python
from fluvio import Fluvio

if __name__ == "__main__":
    topic = "python-topic"
    record = "Hello from python!"

    # Connect to the cluster, send one record, and flush before exiting.
    fluvio = Fluvio.connect()
    producer = fluvio.topic_producer(topic)
    producer.send_string(record)
    producer.flush()
```

```typescript
import Fluvio from "@fluvio/client";

const produce = async () => {
  const TOPIC_NAME = "node-topic";
  const RECORD_TEXT = "Hello from node!";

  await fluvio.connect();
  const producer = await fluvio.topicProducer(TOPIC_NAME);
  await producer.send(RECORD_TEXT);
};

const fluvio = new Fluvio();
produce();
```

Coming soon ...

Apply transformations in-line as your data traverses the dataflows. Use pre-built SmartModules from the Hub or build your own with SMDK.
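As a rough illustration of what an in-line filter transformation does to a stream, the following Python sketch keeps only the records that match a regular expression such as `[Cc]at`. It is not the SmartModule API: `regex_filter` is a hypothetical helper written for this example, and it only models the filtering behavior on plain strings.

```python
import re
from typing import Iterable, Iterator


def regex_filter(records: Iterable[str], pattern: str) -> Iterator[str]:
    """Yield only the records in which `pattern` matches somewhere.

    Hypothetical helper for illustration; not part of any Fluvio library.
    """
    compiled = re.compile(pattern)
    for record in records:
        if compiled.search(record):
            yield record


if __name__ == "__main__":
    stream = ["Cat nap", "dog walk", "black cat", "CAT scan"]
    for kept in regex_filter(stream, "[Cc]at"):
        print(kept)
```

Because the function is a generator, records are evaluated one at a time as they arrive, which mirrors how a filter sits in the middle of a dataflow rather than operating on a finished batch.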

```yaml
# Filter out all records that fail to match `Cat` or `cat`
transforms:
  - uses: infinyon/[email protected]
    with:
      regex: "[Cc]at"
```

```yaml
# JOLT: JSON Language for Transformations
transforms:
  - uses: infinyon/[email protected]
    with:
      spec:
        - operation: remove
          spec:
            id: ""
        - operation: shift
          spec:
            "*": "data.&0"
        - operation: default
          spec:
            data:
              source: "http-connector"
```

```yaml
# Transform JSON records into SQL statements (used with sql-sink)
transforms:
  - uses: infinyon/[email protected]
    with:
      mapping:
        table: "target_table"
        map-columns:
          "device_id":
            json-key: "device.device_id"
            value:
              type: "int"
              required: true
          "record":
            json-key: "$"
            value:
              type: "jsonb"
              required: true
```

```yaml
# Look at last 10 records.
# Filter out based on your custom business logic.
transforms:
  - uses: infinyon/[email protected]
    lookback:
      last: 10
```

```bash
## Use SmartModule Development Kit (smdk) to build your own.
$ fluvio install smdk

## Create your SmartModule project
$ smdk generate
🤷 Project Name: my-smartmodule
🤷 Which type of SmartModule would you like?
❯ filter
  map
  filter-map
  array-map
  aggregate

## Build & Test
$ smdk build
$ smdk test

## Load to Cluster
$ smdk load

## Publish to Hub
$ smdk publish
```

Apply deduplication for exactly-once semantics. Choose our certified pre-built algorithms or build your own.
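To make the idea of bounded deduplication concrete, here is a minimal Python sketch that remembers recently seen keys up to a maximum count and a maximum age, in the spirit of the `count` and `age` bounds used in this section's configuration. The class `BoundedDedup` and its methods are hypothetical names for this example, not Fluvio's implementation; it assumes timestamps never decrease and that `1w` means one week.

```python
from collections import OrderedDict


class BoundedDedup:
    """Remember recently seen keys, bounded by count and by age.

    Hypothetical sketch; assumes `now` values are non-decreasing.
    """

    def __init__(self, max_count: int, max_age_secs: float) -> None:
        self.max_count = max_count
        self.max_age_secs = max_age_secs
        # key -> time first seen; oldest entries sit at the front
        self._seen = OrderedDict()

    def _evict(self, now: float) -> None:
        # Forget keys that are older than the age bound.
        while self._seen:
            _, seen_at = next(iter(self._seen.items()))
            if now - seen_at > self.max_age_secs:
                self._seen.popitem(last=False)
            else:
                break
        # Forget the oldest keys until the count bound holds.
        while len(self._seen) > self.max_count:
            self._seen.popitem(last=False)

    def is_new(self, key: str, now: float) -> bool:
        """Return True if `key` has not been seen within the bounds."""
        self._evict(now)
        if key in self._seen:
            return False
        self._seen[key] = now
        self._evict(now)
        return True


if __name__ == "__main__":
    # Assumed reading of the bounds: 1,000,000 keys and one week.
    dedup = BoundedDedup(max_count=1_000_000, max_age_secs=7 * 24 * 3600)
    records = [("1", "one"), ("1", "one"), ("2", "two")]
    for t, (key, value) in enumerate(records):
        if dedup.is_new(key, now=float(t)):
            print(f"{key}: {value}")
```

The bounds are a trade-off: they cap memory use, but a duplicate that arrives after its key has been evicted would pass through, so the window should be sized to cover the longest expected retry delay.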

```yaml
# Deduplication configuration file
# - topic-config.yaml
name: dedup
deduplication:
  bounds:
    count: 1000000
    age: 1w
  filter:
    transform:
      uses: infinyon-labs/[email protected]
```

```bash
# apply dedup configuration
$ fluvio topic apply --config topic-config.yaml

# produce
$ fluvio produce dedup --key-separator ":"
1: one
1: one
2: two

# consume
$ fluvio consume dedup -B -k
1: one
2: two
```

Materialized views are in early development and the interfaces presented here are subject to change. Join us on Discord to give us feedback.
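The kind of computation a materialized view can maintain, a per-key average over fixed, non-overlapping (tumbling) time windows, can be sketched in a few lines of Python. This is only a conceptual model: `tumbling_average` and the `(timestamp, vehicle_id, speed)` event tuple are assumptions made for this example and say nothing about how Fluvio computes or stores the view.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical event shape: (timestamp_secs, vehicle_id, speed)
Event = Tuple[float, int, float]


def tumbling_average(
    events: Iterable[Event], window_secs: float
) -> Dict[int, Dict[int, float]]:
    """Return {window_index: {vehicle_id: average speed}}."""
    # (window, vehicle_id) -> [running sum, count]
    sums = defaultdict(lambda: [0.0, 0])
    for ts, vehicle_id, speed in events:
        window = int(ts // window_secs)  # each event belongs to exactly one window
        acc = sums[(window, vehicle_id)]
        acc[0] += speed
        acc[1] += 1

    result: Dict[int, Dict[int, float]] = {}
    for (window, vehicle_id), (total, count) in sums.items():
        result.setdefault(window, {})[vehicle_id] = total / count
    return result


if __name__ == "__main__":
    events = [
        (0.0, 123, 8.0),
        (60.0, 123, 12.0),
        (120.0, 456, 35.0),
        (310.0, 123, 20.0),  # falls into the second 5-minute window
    ]
    print(tumbling_average(events, window_secs=300))
```

Keeping only a running sum and count per group means the state stays small no matter how many events arrive within a window.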

```yaml
# Materialized view configuration file
# - mv.yaml
apiVersion: 0.1.0
meta:
  name: avg_bus_speed
materialize:
  window:
    type: tumbling
    bound: "5min"
  trigger: events
  input:
    topic: helsinki
    schema:
      - name: vehicle_id
        type: u16
      - name: speed
        type: float
  output:
    fields:
      - name: vehicle_id
      - name: avg_speed
  compute:
    uses: "infinyon-labs/[email protected]"
    with:
      group_by:
        - vehicle_id
      operation:
        type: average
        param: speed
        result: avg_speed
```

```bash
# create materialized view
$ fluvio materialized-view create --config mv.yaml

# view materialized view current state
$ fluvio state avg_bus_speed
{
  [
    {
      "vehicle_id": "123",
      "avg_speed": 10.0
    },
    {
      "vehicle_id": "456",
      "avg_speed": 35.0
    }
  ]
}
```

Send data to multiple destinations with built-in certified connectors, your own custom connectors, or your full-featured consumer clients.

```yaml
apiVersion: 0.1.0
meta:
  version: 0.2.5
  name: my-http-sink
  type: http-sink
  topic: http-sink-topic
  secrets:
    - name: AUTHORIZATION_TOKEN
http:
  endpoint: "http://127.0.0.1/post"
  headers:
    - "Authorization: token ${{ secrets.AUTHORIZATION_TOKEN }}"
    - "Content-Type: application/json"
```

```yaml
apiVersion: 0.1.0
meta:
  version: 0.3.3
  name: json-sql-connector
  type: sql-sink
  topic: sql-topic
  secrets:
    - name: DATABASE_URL
sql:
  url: ${{ secrets.DATABASE_URL }}
transforms:
  - uses: infinyon/json-sql
    with:
      mapping:
        table: "topic_message"
        map-columns:
          "device_id":
            json-key: "device.device_id"
            value:
              type: "int"
              default: "0"
              required: true
          "record":
            json-key: "$"
            value:
              type: "jsonb"
              required: true
```

```yaml
apiVersion: 0.1.0
meta:
  version: 0.1.2
  name: weather-monitor-sandiego
  type: graphite-sink
  topic: weather-ca-sandiego
graphite:
  # https://graphite.readthedocs.io/en/latest/feeding-carbon.html#step-1-plan-a-naming-hierarchy
  metric-path: "weather.temperature.ca.sandiego"
  addr: "localhost:2003"
```

```yaml
apiVersion: 0.1.0
meta:
  version: 0.1.0
  name: duckdb-connector
  type: duckdb-sink
  topic: fluvio-topic-source
duckdb:
  url: 'local.db' # local duckdb
```

```rust
use async_std::stream::StreamExt;
use fluvio::{Fluvio, Offset};

#[async_std::main]
async fn main() {
    let topic = "rust-topic";
    let partition = 0;

    // Connect to the cluster and open a consumer on one partition.
    let fluvio = Fluvio::connect().await.unwrap();
    let consumer = fluvio.partition_consumer(topic, partition).await.unwrap();

    // Start one record before the end of the partition and print that record.
    let mut stream = consumer.stream(Offset::from_end(1)).await.unwrap();
    if let Some(Ok(record)) = stream.next().await {
        let string = String::from_utf8_lossy(record.value());
        println!("{}", string);
    }
}
```

```python
#!/usr/bin/env python
from fluvio import Fluvio, Offset

if __name__ == "__main__":
    topic = "python-topic"
    partition = 0

    fluvio = Fluvio.connect()
    consumer = fluvio.partition_consumer(topic, partition)

    # Read the last 10 records, then stop.
    for idx, record in enumerate(consumer.stream(Offset.from_end(10))):
        print("{}".format(record.value_string()))
        if idx >= 9:
            break
```

```typescript
import Fluvio, { Offset, Record } from "@fluvio/client";

const consume = async () => {
  const TOPIC_NAME = "hello-node";
  const PARTITION = 0;

  await fluvio.connect();
  const consumer = await fluvio.partitionConsumer(TOPIC_NAME, PARTITION);
  await consumer.stream(Offset.FromEnd(), async (record: Record) => {
    console.log(`Key=${record.keyString()}, Value=${record.valueString()}`);
    process.exit(0);
  });
};

const fluvio = new Fluvio();
consume();
```

Coming soon ...

```bash
## Use Connector Development Kit (cdk) to build your own.
$ fluvio install cdk

## Create your connector project
$ cdk generate
🤷 Project Name: my-connector
🤷 Which type of Connector would you like [source/sink]? ›
  source
❯ sink

## Build & Test
$ cdk build
$ cdk test

## Deploy on your machine
$ cdk deploy

## Publish to Hub
$ cdk publish
```